So Ed sent an email which explained the math around calculating variance. That's useful but probably overkill for our purposes, and it's worth going over why.

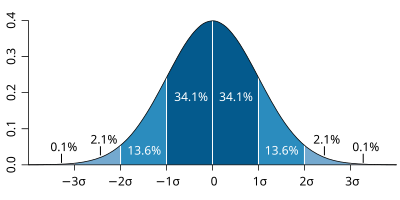

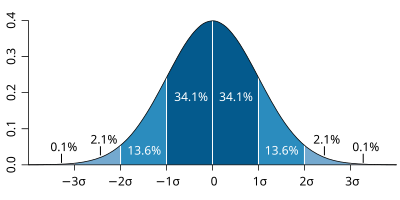

In fact, what we are trying to do is well understood via "Standard Deviation." Everyone is familiar with Standard Deviation. In charts it looks like this:

If we take this bell curve and put the earliest date a task could possibly be completed at the point 3 standard deviations to the left, and the longest a task could possibly take at the point 3 standard deviations to the right, we would have a risk assessment showing a range of likely completion dates for our task, assuming its risk follows a natural bell curve (as we'll see in a moment, the hump for most software tasks is to the right of center).

All things being equal, if you estimated a completion date for a task as right in the middle, you would have a 50% chance of finishing by that date. About 34.1% of outcomes fall between the middle (median) date and 1 standard deviation to its right, so you would have an 84.1% chance of finishing by then. How many days or hours is that? Well, that depends on the width of the graph, which is found by estimating the earliest possible completion date and putting it on the left of the graph and the longest possible completion date on the right. Then draw your bell curve between the two. Simple.
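To make the width of the graph concrete, here is a minimal sketch using Python's standard `statistics.NormalDist`. It assumes the earliest and longest dates sit exactly 3 standard deviations either side of the middle, and it borrows the 7-day and 17-day figures used later in this post. The helper name `symmetric_curve` is made up for illustration.

```python
from statistics import NormalDist


def symmetric_curve(earliest: float, latest: float) -> NormalDist:
    """Bell curve with `earliest` at -3 sd and `latest` at +3 sd (an assumption)."""
    middle = (earliest + latest) / 2
    sigma = (latest - earliest) / 6  # six standard deviations span the whole range
    return NormalDist(mu=middle, sigma=sigma)


curve = symmetric_curve(7, 17)
print(f"middle = {curve.mean:.1f} days, 1 sd = {curve.stdev:.2f} days")
print(f"P(done by the middle date) = {curve.cdf(curve.mean):.1%}")
print(f"P(done by middle + 1 sd)   = {curve.cdf(curve.mean + curve.stdev):.1%}")
```

With a 7 to 17 day range, one standard deviation works out to a bit under two days, which is the "how many days is that?" answer for this example.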

By the way, as Tom DeMarco and Timothy Lister point out in their excellent (and short) book, Waltzing with Bears, a developer's first guess - their instinctual feel - for how long a task will take maps very well to the quickest a task can be completed (the date on the left, 3 standard deviations from center). There are a lot of reasons for this, the primary one probably being that we are asked for this number when we know the least we'll ever know about a task. It's fairly easy to envision a solution and somewhat more difficult to see what will get in the way of crafting that solution.

Here's a funny tale that illustrates what I mean when I say the quickest or earliest date is your first instinct. Had our adventurers estimated the LONGEST their trip could take, they would have asked themselves an entirely different set of questions. Instead, they were naively optimistic and forged ahead. The results are a hilarious tale of estimation gone wrong:

Why are software development task estimations regularly off by a factor of 2-3?

So here are some flavors of risk curves just mocked up on a whiteboard. The orange tick marks represent where I think the standard deviations fall, but we're not trying to be super accurate here.

Let's name them:

- Low Risk.

- Done it before.

- Never done it.

- Super easy.

- No idea.

The first curve is called low risk because it shows a normal distribution. We know from all of development history that for anything interesting the third graph down is probably the most likely. If you had done it before, you would copy, paste, and clean up. That would be the second curve down or the fourth.

So, let's take a minute and look at the third curve down. What does it really tell us? Let's use an example.

Let's say we've estimated that a feature will take 10 days to develop using the "seat of our pants" method of estimating. By that, I mean we were asked, "How long will this take?" and we guessed.

If we are optimistic, we might think, "Well, if I really get a jump on this I could finish it in 7 or 8 days, but 10 days sounds reasonable." Then we might create an estimate that looks like this (for argument's sake we are putting 10 days as the median):

This graph is slightly weighted to the left. If the earliest we could finish is 7 days and the maximum is 17 days, then to say we will be done in 10 days is skewing the peak to the left. This is the way that most people estimate - with a left-leaning bias. This may be the most common, but it also sets you up for lots of disappointment because our estimate will, on average, be too early.

Now let's compare it to the way that most people complete the task. Most people complete their tasks weighted toward the right, which is the third curve down.

To fit the third graph (or even the first) we are forced to rethink our estimate. If 7 days is the earliest possible and 17 the longest possible, then the middle would be 12 days. If we draw the third curve down, we're going to get something closer to 13 days or 14 days.

What's interesting about this is we haven't changed anything except the curve applied to the original data. Essentially we went from being optimistic to being normal weighted. I think for most projects the risk curve is shifted a bit to the left during estimation, but to the right when actually doing the work. In my experience tasks finish well before the longest possible time but just to the right of the median given by a standard bell curve.

Because tasks finish slightly long and are shifted to the right, the third chart down is the one that represents the most likely outcome, and the curve which I believe represents the "normal" (i.e., most common) path of working on a task. The odds of running long seem to slightly outweigh the odds of running short, probably by about 1/2 to 1 standard deviation if you were to plot a natural bell curve.
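The size of that rightward shift can be turned into days. A minimal sketch, assuming the 7-day and 17-day bounds sit 3 standard deviations either side of the middle and treating the shift as simply sliding the peak to the right (the function name `shifted_peak` is made up for illustration, and a real skewed curve would not be this tidy):

```python
def shifted_peak(earliest: float, latest: float, shift_in_sd: float) -> float:
    """Most likely completion day when the peak moves `shift_in_sd` sd to the right."""
    middle = (earliest + latest) / 2
    sigma = (latest - earliest) / 6  # assumes earliest/latest are at -3 sd / +3 sd
    return middle + shift_in_sd * sigma


for shift in (0.0, 0.5, 1.0):
    peak = shifted_peak(7, 17, shift)
    print(f"shift of {shift:.1f} sd -> peak at day {peak:.2f}")
```

For the 7 to 17 day example, shifts of half and one standard deviation land close to day 13 and day 14, in line with the estimate above.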

I'll wrap this up with one more point: padding. Suppose you are working with a Project Manager (PM). They ask, "How long will this take?" You think, "I can do it in 7 days." Being clever, you decide to pad the answer and say 10 days. After all, that's about 43% padding (3/7 ≈ 0.43).

You see this a lot built into spreadsheets like the ones they use at my office that add padding for low, medium, and high risk. But now we'll see why this is inadequate for anything but a short (1-2 month) project.

A quick glance at the chart above shows that you've only got about a 20% chance of finishing on time if your estimate is 10 days. Why? Because you didn't factor in all the data - in fact, you only used the earliest possible date and a random amount of padding. That's not very professional - it's amateurish in fact, albeit very common.

The 7 days your instinct and experience tell you the task will take most likely represents the "nano-percent" date - indicated on my charts with a capital "N". There's no reason to pad 43% or 50% or even 200%. Those are all just made-up amounts of extra time created by not understanding the nature of task work. (In most cases where they do this they will tell you it's based on historical data, but you will never see or be shown this historical data. They are simply lying. Perhaps, years ago, someone told someone it was historical, but now it's bullshit.)
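As a rough cross-check on the padded 10-day answer, the sketch below asks how likely it is to be done by day 10 under a plain bell curve fitted to the 7 to 17 day range. It assumes the two bounds sit 3 standard deviations either side of the middle and models the rightward lean as a simple slide of the peak. The name `chance_done_by` is made up for illustration, and since the hand-drawn chart is not reproduced here, the figures need not match the roughly 20% read off it.

```python
from statistics import NormalDist


def chance_done_by(day: float, earliest: float, latest: float,
                   shift_in_sd: float = 0.0) -> float:
    """P(finished on or before `day`) for a bell curve over [earliest, latest]."""
    sigma = (latest - earliest) / 6  # assumes bounds at -3 sd and +3 sd
    peak = (earliest + latest) / 2 + shift_in_sd * sigma
    return NormalDist(mu=peak, sigma=sigma).cdf(day)


for shift in (0.0, 0.5, 1.0):
    p = chance_done_by(10, 7, 17, shift)
    print(f"peak shifted {shift:.1f} sd right: P(done by day 10) = {p:.1%}")
```

Under these assumptions the odds come out at roughly 12% for the symmetric curve and lower still once the peak is shifted right, which points the same way as the chart: a padded 10-day answer is far more likely to be missed than hit.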

Instead, what you can do is estimate the *most* time the task can take. This is a different question, and asking it will help you think differently about the answer.

Remember, we are not trying to come up with padding. There is a high probability that you will eat up your padding. Padding is just another way of lying. If lying is too strong for you, then ask yourself how you would defend your "padding." Would you be able to reasonably defend it? Do you have the historical data from these developers to show what you're claiming? Unlikely. No, you'd be spooling some super bullshit - and worse yet, you'd be offended when called on it. Ha - how distorted is that?

I can defend both the earliest date I think something could be done and the longest date I think it could possibly take to develop. The earliest is the date your experience tells you the thing will take if all the breaks go your way, if every hour is productive and nothing hidden pops up. That dream sequence won't happen, but if it did you could finish the task on the earliest completion date.

The longest is the time that it would take if every conceivable thing went wrong and if every issue that could arise did. There is almost no chance you'll get to this maximum date, but most developers can easily estimate it. After all, it should represent the point at which you'd give up, or the date on which the feature would be pulled or cancelled. This date should not be a super extreme, but a fairly reasonable imagining of "the longest it could take." No one is interested in an estimate of "When hell freezes over." It should be just as well considered and thoughtful as the earliest completion date. These are still estimates, after all, and you create estimates by drawing on your experience and the project data.

Now back to the conversation with the PM. They ask, "How long will this take?" You say, "No longer than 17 days - that's for sure." The PM will be nodding for sure, if not outright freaking out. They will say, "Hey! No shit it won't take longer than 17 days - if it took that long I'm sure we'd send the work offshore." (Forgive them for they know not what they do.) So you answer, "Yep, that's exactly how I came up with 17 days. I think this is a task with a normal amount of risk associated with it and there is no chance we could get it done any sooner than 7 days. So, I'm giving you an estimate that the task will take between 7 and 17 days with the most likely delivery being on day 13 or 14."

They are not going to like this answer. Tell them, "That's my answer. If you want a different answer, then '...you make something up,' but remember, I'm committed to my answer and feel that's a quality estimate."

I've rarely seen a PM who didn't accept that answer. The couple of times that they didn't, it blew up in their face and I was first in line to remind them of their poor choices. If this is about learning, those are "learning moments." If you don't feel comfortable rubbing it in, at least lament the poor choice.

One common response is for the PM to sit and listen to everything you say and just hear, "7 days." I agree this is a problem and I believe that to combat it all of your communication needs to include all of the data. This is why the tooling needs to support it and why you need to use all the data in the retrospectives and information radiators. Visibility will cure this problem. Even if the PM publishes the short end of your estimate, you are free to publish the entire range of dates and to move forward reasonably.

So there you go. A practical example of how you might feel something was a 7-day task, then be led astray by "padding" and end up lying to everyone and claiming you can do it in 10 days, not really based on anything. And a practical example of how you might feel something was a 7-day task but consider that for certain it'll get done in 17 days, apply a risk assessment curve and come up with an entirely different estimate that I think you would be able to defend - 50% chance of 13 days, 65% chance of 14 days and 80% chance of 15 days.
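Going from a confidence level to a date can be sketched the same way. This is an illustration under stated assumptions, not the way the whiteboard numbers were produced: the 7-day and 17-day bounds are taken to be 3 standard deviations either side of the middle, the rightward lean is modelled as sliding the peak half a standard deviation right, and partial days are rounded up. The name `day_for_confidence` is made up for the example.

```python
import math
from statistics import NormalDist


def day_for_confidence(confidence: float, earliest: float, latest: float,
                       shift_in_sd: float = 0.5) -> int:
    """Whole day by which the task is done with probability `confidence`."""
    sigma = (latest - earliest) / 6  # assumes bounds at -3 sd and +3 sd
    peak = (earliest + latest) / 2 + shift_in_sd * sigma
    day = NormalDist(mu=peak, sigma=sigma).inv_cdf(confidence)
    return math.ceil(day)  # round up: a part-used day is still a used day


for confidence in (0.50, 0.65, 0.80):
    day = day_for_confidence(confidence, 7, 17)
    print(f"{confidence:.0%} sure we are done by day {day}")
```

With the half standard deviation shift, this comes out at days 13, 14 and 15 for 50%, 65% and 80% confidence. A different shift or rounding rule moves the dates, so treat the shift as a knob to tune against your own history.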

To be clear, as a professional programmer you have several objectives. One is to clearly communicate what you know. You DON'T know how long any programming task will take ahead of time - that would be fortune telling, not estimating. What you can guess with reasonable certainty is the nano-percent date and the one hundred percent date.

You also should have a really good feel for whether the thing is something you've done before, something that is challenging, or something that is literally a copy of another feature you just did. Each of these would determine a clear choice for the risk curve to apply to the delivery date range. All things being equal, you can just apply the natural bell curve and things will tend to fall along that probability curve when they have a natural distribution.

What you want to avoid is the implication that there is a "single delivery date." The PMs I've worked with are used to getting answers like, "I'm 80% sure we can have that by Friday." I don't know if they hear that, but that's what I say. You should say that too.

The question is probably rolling around in your head, "Is this worth the effort?" First, it's very little effort. It's certainly a lot less effort than the outcome of not doing it. So the answer is, "Yes, it's worth it."

If you think this is hard, think of all the time you spend going back and forth with the PM/TDM over deadlines and timelines. We need to find a better way to work and this is one step in that direction. Giving estimates as probability ranges changes the nature of the dialog and brings it back into alignment with reality. It simultaneously communicates expectations and the reasoning for those expectations. Your expectations are that you'll finish between these dates, with the probability of finishing on any date determined by a glance at the curve, and the reason for that particular curve is that you think this item is "low risk," "high risk" or "normal risk."

There is an even more important reason why we should care. With the proper tooling, this form of estimating would help you focus on improving velocity.

And that is, after all, how Agile teams get better.
